This is the fifth in a series of posts about calculating roots without a calculator, with special consideration to how these tales can engage students more deeply with the secondary mathematics curriculum. Most students today have a hard time believing that square roots can be computed without a calculator, so hopefully these stories will give them some appreciation for their elders.

Today’s story takes us back to a time before the advent of cheap pocket calculators: 1949.

The following story comes from the chapter “Lucky Numbers” of Surely You’re Joking, Mr. Feynman!, a collection of tales by the late Nobel Prize winning physicist, Richard P. Feynman. Feynman was arguably the greatest American-born physicist — the subject of the excellent biography Genius: The Life and Science of Richard Feynman — and he had a tendency to one-up anyone who tried to one-up him. (He was also a serial philanderer, but that’s another story.) Here’s a story involving how, in the summer of 1949, he calculated ![\sqrt[3]{1729.03}](https://s0.wp.com/latex.php?latex=%5Csqrt%5B3%5D%7B1729.03%7D&bg=ffffff&fg=000000&s=0&c=20201002) without a calculator.

The first time I was in Brazil I was eating a noon meal at I don’t know what time — I was always in the restaurants at the wrong time — and I was the only customer in the place. I was eating rice with steak (which I loved), and there were about four waiters standing around.

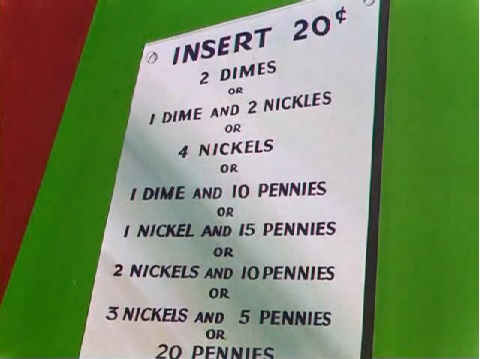

A Japanese man came into the restaurant. I had seen him before, wandering around; he was trying to sell abacuses. (Note: At the time of this story, before the advent of pocket calculators, the abacus was arguably the world’s most powerful hand-held computational device.) He started to talk to the waiters, and challenged them: He said he could add numbers faster than any of them could do.

The waiters didn’t want to lose face, so they said, “Yeah, yeah. Why don’t you go over and challenge the customer over there?”

The man came over. I protested, “But I don’t speak Portuguese well!”

The waiters laughed. “The numbers are easy,” they said.

They brought me a paper and pencil.

The man asked a waiter to call out some numbers to add. He beat me hollow, because while I was writing the numbers down, he was already adding them as he went along.

I suggested that the waiter write down two identical lists of numbers and hand them to us at the same time. It didn’t make much difference. He still beat me by quite a bit.

However, the man got a little bit excited: he wanted to prove himself some more. “Multiplicação!” he said.

Somebody wrote down a problem. He beat me again, but not by much, because I’m pretty good at products.

The man then made a mistake: he proposed we go on to division. What he didn’t realize was, the harder the problem, the better chance I had.

We both did a long division problem. It was a tie.

This bothered the hell out of the Japanese man, because he was apparently well trained on the abacus, and here he was almost beaten by this customer in a restaurant.

“Raios cubicos!” he says with a vengeance. Cube roots! He wants to do cube roots by arithmetic. It’s hard to find a more difficult fundamental problem in arithmetic. It must have been his topnotch exercise in abacus-land.

He writes down a number on some paper— any old number— and I still remember it: $1729.03$. He starts working on it, mumbling and grumbling: “Mmmmmmagmmmmbrrr”— he’s working like a demon! He’s poring away, doing this cube root.

Meanwhile I’m just sitting there.

One of the waiters says, “What are you doing?”

I point to my head. “Thinking!” I say. I write down $12$ on the paper. After a little while I’ve got $12.002$.

The man with the abacus wipes the sweat off his forehead: “Twelve!” he says.

“Oh, no!” I say. “More digits! More digits!” I know that in taking a cube root by arithmetic, each new digit is even more work than the one before. It’s a hard job.

He buries himself again, grunting “Rrrrgrrrrmmmmmm …,” while I add on two more digits. He finally lifts his head to say, “$12.0$!”

The waiters are all excited and happy. They tell the man, “Look! He does it only by thinking, and you need an abacus! He’s got more digits!”

He was completely washed out, and left, humiliated. The waiters congratulated each other.

How did the customer beat the abacus?

The number was $1729.03$. I happened to know that a cubic foot contains $1728$ cubic inches, so the answer is a tiny bit more than $12$. The excess, $1.03$, is only one part in nearly $2000$, and I had learned in calculus that for small fractions, the cube root’s excess is one-third of the number’s excess. So all I had to do is find the fraction $1/1728$, and multiply by $4$ (divide by $3$ and multiply by $12$). So I was able to pull out a whole lot of digits that way.

A few weeks later, the man came into the cocktail lounge of the hotel I was staying at. He recognized me and came over. “Tell me,” he said, “how were you able to do that cube-root problem so fast?”

I started to explain that it was an approximate method, and had to do with the percentage of error. “Suppose you had given me $28$. Now the cube root of $27$ is $3$ …”

He picks up his abacus: zzzzzzzzzzzzzzz— “Oh yes,” he says.

I realized something: he doesn’t know numbers. With the abacus, you don’t have to memorize a lot of arithmetic combinations; all you have to do is to learn to push the little beads up and down. You don’t have to memorize 9+7=16; you just know that when you add 9, you push a ten’s bead up and pull a one’s bead down. So we’re slower at basic arithmetic, but we know numbers.

Furthermore, the whole idea of an approximate method was beyond him, even though a cubic root often cannot be computed exactly by any method. So I never could teach him how I did cube roots or explain how lucky I was that he happened to choose $1729.03$.
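To see the one-third rule at work on a smaller number (this worked example is ours, not part of Feynman's account), take 28, which is slightly more than $27 = 3^3$. The excess is one part in 27, so the cube root should exceed 3 by about one part in 81:

$$\sqrt[3]{28} = 3\sqrt[3]{1 + \tfrac{1}{27}} \approx 3\left(1 + \tfrac{1}{3}\cdot\tfrac{1}{27}\right) = 3 + \tfrac{1}{27} \approx 3.037.$$

The true value is about $3.0366$, so even with an excess this large the rule gives three correct significant digits.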

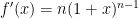

The key part of the story, “for small fractions, the cube root’s excess is one-third of the number’s excess,” deserves some elaboration, especially since this computational trick isn’t often taught in those terms anymore. If $f(x) = (1+x)^n$, then $f'(x) = n(1+x)^{n-1}$, so that $f'(0) = n$. Since $f(0) = 1$, the equation of the tangent line to $f(x)$ at $x = 0$ is

$$L(x) = f(0) + f'(0) \cdot (x - 0) = 1 + nx.$$

The key observation is that, for $x \approx 0$, the graph of $L(x)$ will be very close indeed to the graph of $f(x)$. In Calculus I, this is sometimes called the linearization of $f$ at $x = 0$. In Calculus II, we observe that these are the first two terms in the Taylor series expansion of $f$ about $x = 0$.
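A quick numerical check makes the closeness of the tangent line concrete. The following is only a minimal sketch: the function names `exact` and `linearization` are our own, and the sample values of $x$ are arbitrary. It compares $(1+x)^n$ with $1 + nx$ for $n = 1/3$ as $x$ shrinks.

```python
def exact(x: float, n: float) -> float:
    """The function being approximated, f(x) = (1 + x)**n."""
    return (1.0 + x) ** n


def linearization(x: float, n: float) -> float:
    """Tangent-line approximation L(x) = 1 + n*x to f(x) at x = 0."""
    return 1.0 + n * x


if __name__ == "__main__":
    n = 1.0 / 3.0  # exponent for cube roots
    for x in (0.1, 0.01, 0.001):
        error = linearization(x, n) - exact(x, n)
        print(f"x = {x:<6} L(x) = {linearization(x, n):.9f}  "
              f"f(x) = {exact(x, n):.9f}  error = {error:.2e}")
```

Each time $x$ shrinks by a factor of 10, the error shrinks by roughly a factor of 100, which is what one would expect when the first neglected Taylor term is proportional to $x^2$.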

For Feynman’s problem, $n = \frac{1}{3}$, so that $\sqrt[3]{1+x} \approx 1 + \frac{1}{3} x$ if $x \approx 0$. Then $\sqrt[3]{1729.03}$ can be rewritten as

![\sqrt[3]{1729.03} = \sqrt[3]{1728} \sqrt[3]{ \displaystyle \frac{1729.03}{1728} }](https://s0.wp.com/latex.php?latex=%5Csqrt%5B3%5D%7B1729.03%7D+%3D+%5Csqrt%5B3%5D%7B1728%7D+%5Csqrt%5B3%5D%7B+%5Cdisplaystyle+%5Cfrac%7B1729.03%7D%7B1728%7D+%7D&bg=ffffff&fg=000000&s=0&c=20201002)

![\sqrt[3]{1729.03} = 12 \sqrt[3]{\displaystyle 1 + \frac{1.03}{1728}}](https://s0.wp.com/latex.php?latex=%5Csqrt%5B3%5D%7B1729.03%7D+%3D+12+%5Csqrt%5B3%5D%7B%5Cdisplaystyle+1+%2B+%5Cfrac%7B1.03%7D%7B1728%7D%7D&bg=ffffff&fg=000000&s=0&c=20201002)

![\sqrt[3]{1729.03} \approx 12 \left( 1 + \displaystyle \frac{1}{3} \times \frac{1.03}{1728} \right)](https://s0.wp.com/latex.php?latex=%5Csqrt%5B3%5D%7B1729.03%7D+%5Capprox+12+%5Cleft%28+1+%2B+%5Cdisplaystyle+%5Cfrac%7B1%7D%7B3%7D+%5Ctimes+%5Cfrac%7B1.03%7D%7B1728%7D+%5Cright%29&bg=ffffff&fg=000000&s=0&c=20201002)

![\sqrt[3]{1729.03} \approx 12 + 4 \times \displaystyle \frac{1.03}{1728}](https://s0.wp.com/latex.php?latex=%5Csqrt%5B3%5D%7B1729.03%7D+%5Capprox+12+%2B+4+%5Ctimes+%5Cdisplaystyle+%5Cfrac%7B1.03%7D%7B1728%7D&bg=ffffff&fg=000000&s=0&c=20201002)

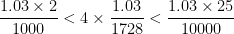

This last equation explains the line “all I had to do is find the fraction $1/1728$, and multiply by $4$.” With enough patience, the first few digits of the correction can be mentally computed since

$$4 \times \frac{1.03}{1728} = \frac{1.03}{432} \approx 0.00238.$$

So Feynman could determine quickly that the answer was $12.002\dots$.
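The correction term $4 \times 1.03/1728$ simplifies to $103/43200$, and its digits can be ground out one at a time exactly as in schoolbook long division. The sketch below mimics that process with integer arithmetic. The helper name `long_division_digits` is hypothetical, and this is our illustration rather than a claim about how Feynman actually did the arithmetic in his head.

```python
def long_division_digits(numerator: int, denominator: int, places: int) -> str:
    """Return numerator/denominator as a decimal string with `places` digits
    after the point, one digit at a time (truncated, not rounded)."""
    whole, remainder = divmod(numerator, denominator)
    digits = []
    for _ in range(places):
        # "Bring down a zero", then see how many times the divisor fits.
        remainder *= 10
        digit, remainder = divmod(remainder, denominator)
        digits.append(str(digit))
    return f"{whole}." + "".join(digits)


if __name__ == "__main__":
    # 4 * 1.03 / 1728 = 1.03 / 432 = 103 / 43200
    print(long_division_digits(103, 43200, 8))
```

Each pass through the loop costs about the same effort, which is why this approach yields extra digits so much more cheaply than extracting a cube root digit by digit.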

By the way,

![\sqrt[3]{1729.03} \approx 12.00238378\dots](https://s0.wp.com/latex.php?latex=%5Csqrt%5B3%5D%7B1729.03%7D+%5Capprox+12.00238378%5Cdots&bg=ffffff&fg=000000&s=0&c=20201002)

So the linearization provides an estimate accurate to eight significant digits. Additional digits could be obtained by using the next term in the Taylor series.
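As a rough check on these claims, the sketch below computes both the tangent-line estimate and the estimate with one more Taylor term, using $(1+x)^{1/3} \approx 1 + \frac{x}{3} - \frac{x^2}{9}$. The function name `cube_root_estimates` is our own, and the reference value comes from Python's floating-point power operator, so it is only trustworthy to roughly 15 significant digits.

```python
def cube_root_estimates(number: float, base: int) -> tuple[float, float]:
    """Estimate number**(1/3) from a nearby perfect cube base**3.

    Returns the first-order (tangent line) and second-order Taylor estimates,
    based on (1 + x)**(1/3) ≈ 1 + x/3 - x**2/9 for small x.
    """
    x = (number - base**3) / base**3  # relative excess, e.g. 1.03/1728
    first_order = base * (1 + x / 3)
    second_order = base * (1 + x / 3 - x**2 / 9)
    return first_order, second_order


if __name__ == "__main__":
    first, second = cube_root_estimates(1729.03, 12)
    reference = 1729.03 ** (1 / 3)  # floating-point value for comparison
    print(f"first order : {first:.10f}  (error {first - reference:+.1e})")
    print(f"second order: {second:.10f}  (error {second - reference:+.1e})")
    print(f"reference   : {reference:.10f}")
```

The first-order estimate overshoots by a little under $5 \times 10^{-7}$. Adding the quadratic term brings the error down to the order of $10^{-10}$.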

I have a similar story to tell. Back in 1996 or 1997, when I first moved to Texas and was making new friends, I quickly discovered that one way to get odd facial expressions out of strangers was by mentioning that I was a math professor. Occasionally, however, someone would test me to see if I really was a math professor. One guy (who is now a good friend; later, we played in the infield together on our church-league softball team) asked me to figure out  without a calculator, before someone could walk to the next room and return with the calculator. After two seconds of panic, I realized that I was really lucky that he happened to pick a number close to  . Using the same logic as above,  .

Knowing that this came from a linearization and that the tangent line to  lies above the curve, I knew that this estimate was too high. But I didn’t have time to work out a correction (besides, I couldn’t remember the full Taylor series off the top of my head), so I answered/guessed  , hoping that I did the arithmetic correctly. You can imagine the amazement when someone punched into the calculator to get  .

th derivative of the original function, and higher-order derivatives usually get progressively messier with each successive differentiation.

Successively using the Quotient Rule makes the derivatives of $\tan x$ messier and messier, but

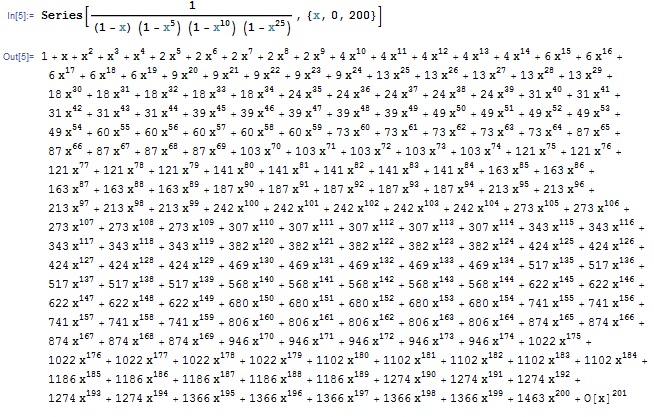

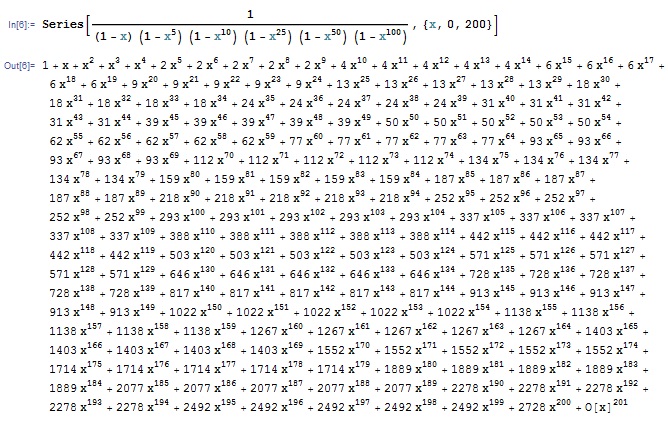

$\tan x$ definitely qualifies as an easy function that most students have seen since high school. It turns out that the Taylor expansion of $\tan x$ can be written as an infinite series using the Bernoulli numbers, but that’s a concept that most calculus students haven’t seen yet.