In this series, I’m discussing how ideas from calculus and precalculus (with a touch of differential equations) can predict the precession in Mercury’s orbit and thus confirm Einstein’s theory of general relativity. The origins of this series came from a class project that I assigned to my Differential Equations students maybe 20 years ago.

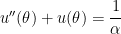

We previously showed that if the motion of a planet around the Sun is expressed in polar coordinates  , with the Sun at the origin, then under Newtonian mechanics (i.e., without general relativity) the motion of the planet follows the differential equation

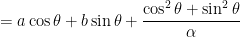

, with the Sun at the origin, then under Newtonian mechanics (i.e., without general relativity) the motion of the planet follows the differential equation

,

,

where  and

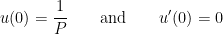

and  is a certain constant. We will also impose the initial condition that the planet is at perihelion (i.e., is closest to the sun), at a distance of

is a certain constant. We will also impose the initial condition that the planet is at perihelion (i.e., is closest to the sun), at a distance of  , when

, when  . This means that

. This means that  obtains its maximum value of

obtains its maximum value of  when

when  . This leads to the two initial conditions

. This leads to the two initial conditions

;

;

the second equation arises since  has a local extremum at

has a local extremum at  .

.

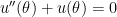

We now take the perspective of a student who is taking a first-semester course in differential equations. There are two standard techniques for solving a second-order nonhomogeneous differential equation with constant coefficients. One of these is the method of variation of parameters. First, we solve the associated homogeneous differential equation

$$u''(\theta) + u(\theta) = 0.$$

The characteristic equation of this differential equation is $r^2 + 1 = 0$, which clearly has the two imaginary roots $r = \pm i$. Therefore, two linearly independent solutions of the associated homogeneous equation are $u_1(\theta) = \cos \theta$ and $u_2(\theta) = \sin \theta$.

(As an aside, this is one answer to the common question, “What are complex numbers good for?” The answer is naturally above the heads of Algebra II students when they first encounter the mysterious number $i$, but complex numbers provide a way of solving the differential equations that model multiple problems in statics and dynamics.)
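The link between the imaginary roots and the two real solutions can be checked in a few lines. The following is a minimal Python sketch using only the standard library; the sample angle is an arbitrary choice made for illustration. It confirms that $\pm i$ satisfy the characteristic equation and that, by Euler's formula, the real and imaginary parts of $e^{i\theta}$ are $\cos\theta$ and $\sin\theta$.

```python
import cmath
import math

# The two roots of the characteristic equation r^2 + 1 = 0.
roots = [1j, -1j]
residuals = [abs(r * r + 1) for r in roots]

# Euler's formula: e^{i*theta} = cos(theta) + i*sin(theta), so the real and
# imaginary parts of the complex solution give the two real solutions.
theta = 0.8  # arbitrary sample angle (an assumption for illustration)
z = cmath.exp(1j * theta)
euler_gap = max(abs(z.real - math.cos(theta)),
                abs(z.imag - math.sin(theta)))

print("residuals of r^2 + 1 at the roots:", residuals)
print("largest gap in Euler's formula:", euler_gap)
```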

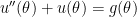

According to the method of variation of parameters, the general solution of the original nonhomogeneous differential equation

is

,

,

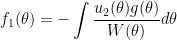

where

,

,

,

,

and  is the Wronskian of

is the Wronskian of  and

and  , defined by the determinant

, defined by the determinant

.

.

Well, that’s a mouthful.

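Fortunately, for the example at hand, these computations are pretty easy. First, since $u_1(\theta) = \cos \theta$ and $u_2(\theta) = \sin \theta$, we have

$$W(\theta) = (\cos \theta)(\cos \theta) - (-\sin \theta)(\sin \theta) = \cos^2 \theta + \sin^2 \theta = 1$$

from the usual Pythagorean trigonometric identity. Therefore, the denominators in the integrals for $f_1(\theta)$ and $f_2(\theta)$ essentially disappear.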
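The variation-of-parameters recipe can also be tried numerically before doing any integrals by hand. The following is a minimal Python sketch under several assumptions: the indefinite integrals are replaced by definite integrals from $0$ to $\theta$ (so both constants of integration are zero), the integrals are approximated with Simpson's rule, the right-hand side is the constant $1/\alpha$, and the value $\alpha = 1.5$ is purely hypothetical. The helper names are illustrative only.

```python
import math

def simpson(func, lo, hi, n=200):
    """Composite Simpson's rule on [lo, hi] with n (even) subintervals."""
    if n % 2:
        n += 1
    h = (hi - lo) / n
    total = func(lo) + func(hi)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * func(lo + k * h)
    return total * h / 3

def particular_solution(u1, du1, u2, du2, g, theta):
    """Variation of parameters with both integration constants set to zero.

    f1 = -int_0^theta u2*g/W,  f2 = int_0^theta u1*g/W,
    where W = u1*u2' - u1'*u2 is the Wronskian.
    """
    def wronskian(t):
        return u1(t) * du2(t) - du1(t) * u2(t)

    f1 = -simpson(lambda t: u2(t) * g(t) / wronskian(t), 0.0, theta)
    f2 = simpson(lambda t: u1(t) * g(t) / wronskian(t), 0.0, theta)
    return f1 * u1(theta) + f2 * u2(theta)

alpha = 1.5  # hypothetical value of the constant alpha

def u_particular(theta):
    # u1 = cos, u1' = -sin, u2 = sin, u2' = cos, g = 1/alpha
    return particular_solution(math.cos, lambda t: -math.sin(t),
                               math.sin, math.cos,
                               lambda t: 1.0 / alpha, theta)

theta = 2.0  # arbitrary sample angle
expected = (1.0 - math.cos(theta)) / alpha
print(u_particular(theta), expected)
```

With these choices the result should agree with $(1 - \cos\theta)/\alpha$, which differs from the constant solution $1/\alpha$ only by a multiple of the homogeneous solution $\cos\theta$.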

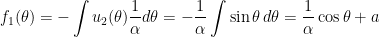

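Since $g(\theta) = \frac{1}{\alpha}$, the integrals for $f_1(\theta)$ and $f_2(\theta)$ are straightforward to compute:

$$f_1(\theta) = -\int u_2(\theta) \frac{1}{\alpha} \, d\theta = -\frac{1}{\alpha} \int \sin \theta \, d\theta = \frac{1}{\alpha} \cos \theta + a,$$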

where we use  for the constant of integration instead of the usual

for the constant of integration instead of the usual  . Second,

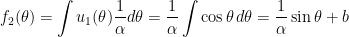

. Second,

,

,

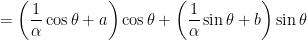

using  for the constant of integration. Therefore, by variation of parameters, the general solution of the nonhomogeneous differential equation is

for the constant of integration. Therefore, by variation of parameters, the general solution of the nonhomogeneous differential equation is

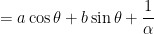

.

.

Unsurprisingly, this matches the answer in the previous post that was found by the method of undetermined coefficients.
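As a sanity check, the general solution $u(\theta) = a\cos\theta + b\sin\theta + \frac{1}{\alpha}$ can be substituted back into the differential equation numerically. The following is a minimal Python sketch that approximates $u''$ with a central difference; the values of $a$, $b$, and $\alpha$ are arbitrary choices for illustration, and the function names are hypothetical.

```python
import math

def u(theta, a, b, alpha):
    """General solution a*cos(theta) + b*sin(theta) + 1/alpha."""
    return a * math.cos(theta) + b * math.sin(theta) + 1.0 / alpha

def ode_residual(theta, a, b, alpha, h=1e-3):
    """Approximate u'' + u - 1/alpha using a central difference for u''."""
    second = (u(theta + h, a, b, alpha)
              - 2.0 * u(theta, a, b, alpha)
              + u(theta - h, a, b, alpha)) / h**2
    return second + u(theta, a, b, alpha) - 1.0 / alpha

# Arbitrary constants: the residual should be near zero for any a and b.
a, b, alpha = 0.7, -1.3, 1.5
worst = max(abs(ode_residual(0.1 * k, a, b, alpha)) for k in range(63))
print("largest residual on [0, 6.2]:", worst)
```

The residual is not exactly zero because the central difference carries a small truncation error, but it should shrink as `h` decreases (until rounding error takes over).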

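For the sake of completeness, I repeat the argument used in the previous two posts to determine $a$ and $b$. This requires using the initial conditions $u(0) = \frac{1}{P}$ and $u'(0) = 0$. From the first initial condition,

$$\begin{aligned} u(0) &= a \cos 0 + b \sin 0 + \frac{1}{\alpha} \\ \frac{1}{P} &= a + \frac{1}{\alpha} \\ a &= \frac{1}{P} - \frac{1}{\alpha} = \frac{\alpha - P}{\alpha P}. \end{aligned}$$

From the second initial condition,

$$\begin{aligned} u'(\theta) &= -a \sin \theta + b \cos \theta \\ u'(0) &= -a \sin 0 + b \cos 0 \\ 0 &= b. \end{aligned}$$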

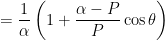

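From these two constants, we obtain

$$\begin{aligned} u(\theta) &= \frac{\alpha - P}{\alpha P} \cos \theta + 0 \sin \theta + \frac{1}{\alpha} \\ &= \frac{1}{\alpha} \left( 1 + \frac{\alpha - P}{P} \cos \theta \right) \\ &= \frac{1 + \epsilon \cos \theta}{\alpha}, \end{aligned}$$

where $\epsilon = \frac{\alpha - P}{P}$.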

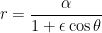

Finally, since $r = 1/u$, we see that the planet’s orbit satisfies

$$r = \frac{\alpha}{1 + \epsilon \cos \theta},$$

so that, as shown earlier in this series, the orbit is an ellipse with eccentricity $\epsilon$.
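The whole derivation can be cross-checked by integrating the initial-value problem directly and comparing against the closed form $r = \alpha / (1 + \epsilon\cos\theta)$ with $\epsilon = (\alpha - P)/P$. The following is a minimal Python sketch using a classical fourth-order Runge-Kutta step; the values $P = 1$ and $\alpha = 1.5$ are hypothetical (they are not Mercury's actual parameters), and the function names are illustrative.

```python
import math

P = 1.0       # hypothetical perihelion distance (arbitrary units)
alpha = 1.5   # hypothetical value of the constant alpha
eps = (alpha - P) / P  # eccentricity implied by the derivation

def rk4_u(theta_end, steps=2000):
    """Integrate u' = v, v' = 1/alpha - u from theta = 0 to theta_end,
    starting from the initial conditions u(0) = 1/P and u'(0) = 0."""
    h = theta_end / steps
    u, v = 1.0 / P, 0.0

    def deriv(u, v):
        return v, 1.0 / alpha - u

    for _ in range(steps):
        k1u, k1v = deriv(u, v)
        k2u, k2v = deriv(u + 0.5 * h * k1u, v + 0.5 * h * k1v)
        k3u, k3v = deriv(u + 0.5 * h * k2u, v + 0.5 * h * k2v)
        k4u, k4v = deriv(u + h * k3u, v + h * k3v)
        u += h * (k1u + 2 * k2u + 2 * k3u + k4u) / 6
        v += h * (k1v + 2 * k2v + 2 * k3v + k4v) / 6
    return u

def r_closed_form(theta):
    """Orbit equation r = alpha / (1 + eps*cos(theta))."""
    return alpha / (1.0 + eps * math.cos(theta))

theta_test = math.pi  # the point opposite perihelion
r_numeric = 1.0 / rk4_u(theta_test)
r_exact = r_closed_form(theta_test)
print(r_numeric, r_exact)
```

At $\theta = 0$ the closed form returns the perihelion distance $P$, and at $\theta = \pi$ it gives the farthest point of the orbit, $\alpha/(1 - \epsilon)$.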
