At long last, we have reached the end of this series of posts.

The derivation is elementary; I’m confident that I could have understood it had I seen it when I was in high school. That said, the word “elementary” in mathematics can be a bit loaded: it means that an argument is based on simple ideas, though those ideas are perhaps used in a profound and surprising way. Perhaps my favorite quote along these lines is this understated gem from the book Three Pearls of Number Theory, which comes after the conclusion of a very complicated proof in Chapter 1:

“You see how complicated an entirely elementary construction can sometimes be. And yet this is not an extreme case; in the next chapter you will encounter just as elementary a construction which is considerably more complicated.”

Here are the elementary ideas from calculus, precalculus, and high school physics that were used in this series:

- Physics
  - Conservation of angular momentum
  - Newton’s Second Law
  - Newton’s Law of Gravitation
- Precalculus
  - Completing the square
  - Quadratic formula
  - Factoring polynomials
  - Complex roots of polynomials
  - Bounds on sine and cosine
  - Period of sine and cosine
  - Zeroes of sine and cosine
  - Trigonometric identities (Pythagorean, sum and difference, double-angle)
  - Conic sections
  - Graphing in polar coordinates
  - Two-dimensional vectors
  - Dot products of two-dimensional vectors (especially perpendicular vectors)
  - Euler’s equation
- Calculus
  - The Chain Rule

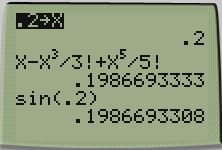

  - Derivatives of […] and […]
  - Linearizations of […], […], and […] near […] (or, more generally, their Taylor series approximations)
  - Derivative of […]
  - Solving initial-value problems
  - Integration by substitution

While these ideas from calculus are elementary, they were certainly used in clever and unusual ways throughout the derivation.

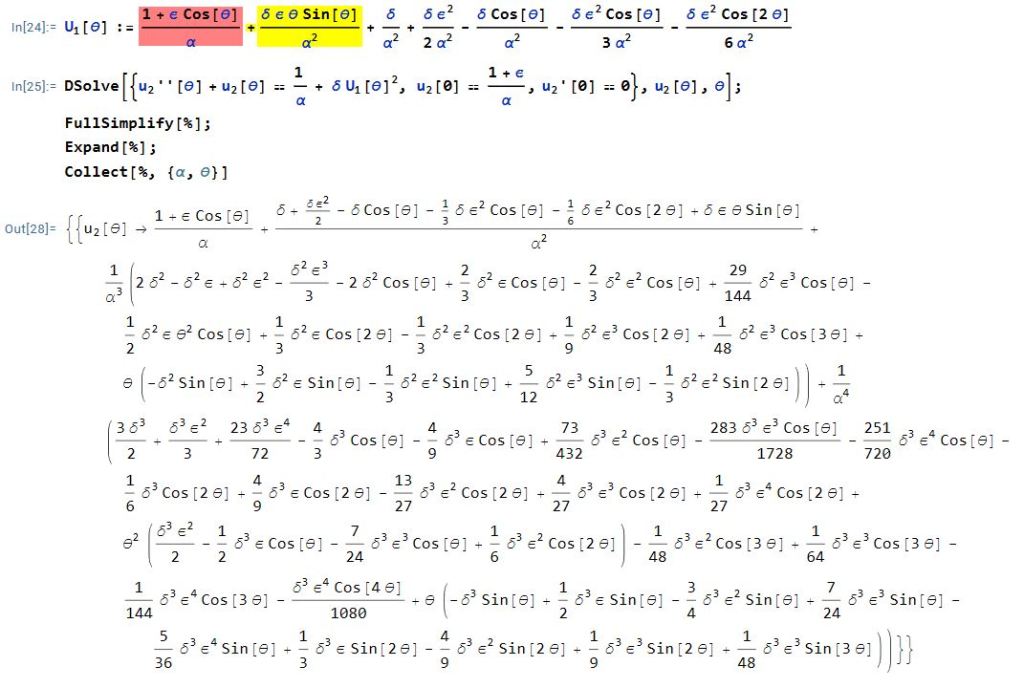

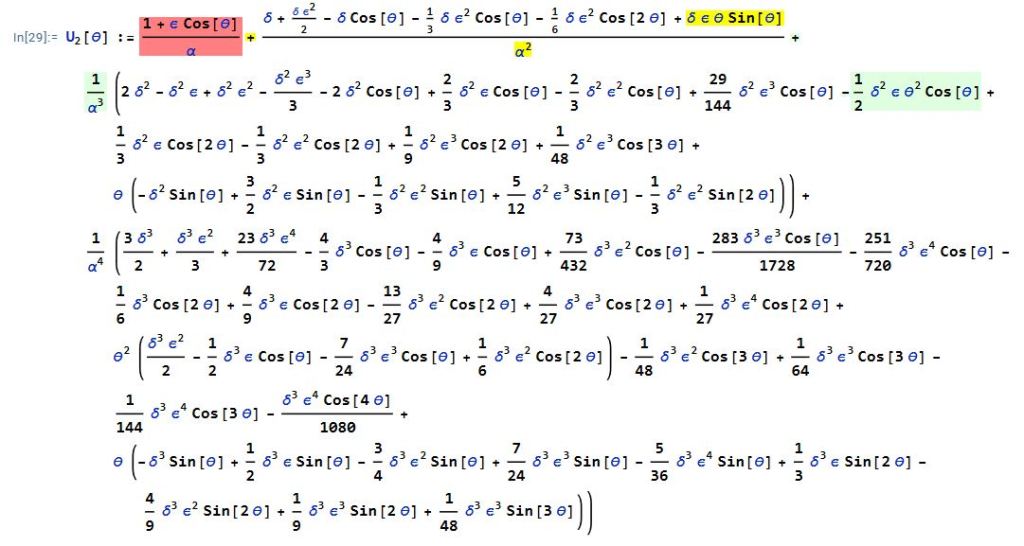

I should add that although the derivation was elementary, certain parts of the derivation could be made easier by appealing to standard concepts from differential equations.

One more thought. While this series of posts was inspired by a calculation that appeared in an undergraduate physics textbook, I had thought that it might be worthy of publication in a mathematical journal as an historical example of an important problem that can be solved by elementary tools. Unfortunately for me, Hieu D. Nguyen’s terrific article “Rearing Its Ugly Head: The Cosmological Constant and Newton’s Greatest Blunder” in The American Mathematical Monthly is already in the record.